Should the world put a pause on AI? Future State 2023 speakers share their views

Is artificial intelligence out of control? Does the government, or the industry itself, need to stop it? We chat with some of the speakers of Future State 2023, which is taking place in Auckland next month, to gather their views.

Image licensed via Shutterstock

If you work in the tech or creative industries, you won't have been able to avoid the term 'AI' in recent months. From AI art generators like DALL-E to ChatGPT, which Microsoft has spent hundreds of millions incorporating into Bing search and Office software, AI seems to be taking over everywhere.

It's not just creatives and techies who are affected, of course. It's affecting the whole of society. AI can now auto-generate convincing articles and reports, although they're often riddled with inaccuracies (sometimes referred to as 'hallucinations'). AI can also create fake photos and videos and even convincingly impersonate natural conversation to the degree that it's difficult to tell if you're speaking to a bot or a real human on the phone. And this pace of change is worrying a lot of people.

And we're not just talking about the usual fuddy-duddies who don't understand technology or are scared of anything new. We're talking about the kind of tech giants who are broadly responsible for this kind of software emerging in the first place.

Specifically, last month the Elon Musk-funded Future of Life Institute (FLI) drafted a letter calling for a six-month pause on developing AI systems "more powerful than GPT-4" and for that time to be used to develop safety protocols. Otherwise, the letter said, governments should step in and enforce a moratorium.

The signatories, including Elon Musk, Steve Wozniak, Evan Sharp, Chris Larsen, Gary Marcus and Andrew Yang, said they were raising the alarm in fear that untrammelled AI development could cause "a profound change in the history of life on Earth". Specific concerns include the possibility of AI propaganda, the loss of jobs, and a general fear that society could lose control. Around the same time, the Italian government imposed a ban on the processing of user data by OpenAI and initiated an investigation into ChatGPT.

What the experts think

So is this a storm in a teacup or a serious issue? Should AI be paused, either by the industry itself or by government action?

We expect this to be a hot topic at Future State 2023, a keynote speaker event presented by Spark Lab and Semi Permanent, which will bring some of the world's top innovators and business minds to Auckland, New Zealand on 11 May. So we asked four of them to share their current thinking, and we share their responses below.

Read on to find out what Danielle Krettek, founder of Google Empathy Lab, Constantine Gavryrok, global director of digital experience design at Adidas, Dr Jonnie Penn, professor of AI ethics and society at Cambridge University, and Sam Conniff, founder of The Uncertainty Experts, had to say.

Meanwhile, to see these experts speak live, along with Mark Adams, VP and head of Innovation at VICE, Alexandra Popova, senior director of digital production personalisation at Adidas and Mikaela Jade, CEO of Indigital, visit the Future State 2023 website.

"Democracy needs protecting."

We'll start with Dr Jonnie Penn, professor of AI ethics and society at Cambridge University, who believes there's a lot of misunderstanding about large language models such as ChatGPT. "These systems have been called a 'calculator for words', but while this is a cute and exciting metaphor, it's also misleading," he explains.

"No matter how often you use a calculator, it doesn't change what a number is. Numbers remain fixed. Unfortunately, the use of these 'AI' models will pollute our everyday language in ways that are incredibly difficult to spot and cost money to fix. The consequences will be serious. Thankfully, though, that outcome is avoidable through strict regulation."

In short, he believes that the modern tech industry can no longer regulate itself. "In ancient Greece, so the proverb goes, engineers had to sleep under the bridges they built. The Open Letter is a reminder that Big Tech, Elon and co are in way over their heads. Since we can't put them under the bridges they want to build, we must turn to other experts – community leaders, labour leaders, and market authorities – to lead us on how to keep democracy vibrant and markets competitive while the technology is made safe."

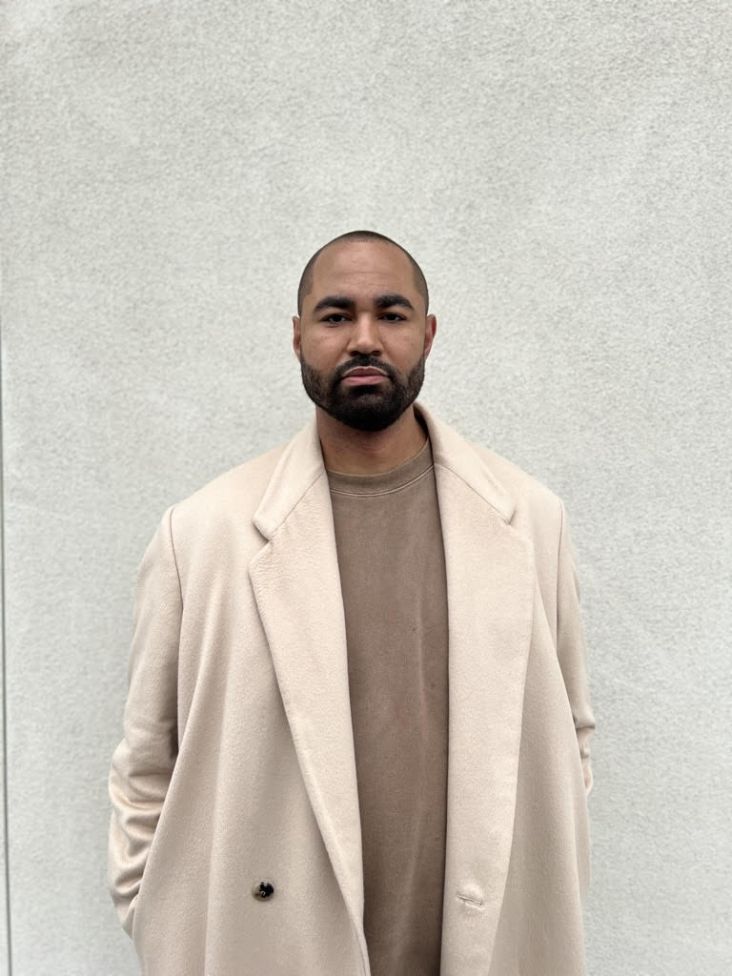

Dr Jonnie Penn, professor of AI ethics and society at Cambridge University

As an analogy, he points to the years it took our society to pull asbestos insulation out of homes, even after we learned it's carcinogenic. "In retrospect, it was a mistake to build with it," he says. "We've learned that lesson over decades and moved on. I think the looming legal and social ramifications of ChatGPT will cause many civic and industry leaders to be careful with what we build with this type of AI. It's a powerful and exciting toolset – I enjoy aspects of it myself – but it must be made sustainable. The errors it creates are not cutesy 'hallucinations'; they're just errors."

"I prefer legislation to pausing AI."

While Constantine Gavryrok, global director of digital experience design at Adidas, agrees that AI needs to be regulated, he's not sold on the idea of putting the brakes on its development. "Personally, as an early adopter, the first thing that comes to my mind is 'NO'; of course, we shouldn't slow down right now," he says. "At a moment when the whole topic is getting unprecedented traction on multiple levels, from governments and institutions to businesses, to individuals, we should probably not stop it. Even if we could."

Why not? "Because only with such traction do we get to learning more about the good, the bad and everything in-between, from successful use cases to failures, with that knowledge and data," he argues.

Constantine adds that he understands why different parties are trying to hit the pause button. "Even though AI has been around for decades, now it's reaching new capacities due to increased computing power. And that leads to fear and concerns, which are only amplified with the improved access and speed of delivering information. Nevertheless, being a part of the tech world, I feel we're well equipped to introduce desirable change."

Constantine Gavryrok, global director of digital experience design at Adidas

He doesn't, though, think that AI should be a free-for-all. "From a more practical perspective, I tend to side with the work on legislation by the European Union and proposed Artificial Intelligence Act that would mandate various development and use requirements. The main idea is that we need both to foster AI innovation and protect the public. While some of the details are still in consideration, you can find the current legislation here."

He explains that this legislation focuses on strengthening rules around data, quality, transparency, human oversight and accountability. "It's also aiming to address ethical questions and implementation challenges in various sectors – which Italy might be most concerned about, for instance – such as healthcare, education, finance and energy."

In short, the cornerstone of the Act Constantine is siding with is a classification system that determines the level of risk AI tech could pose to a person's health and safety or fundamental rights. "The framework includes four tiers and, in my opinion, provides acceptable – for the moment – guidance and regulations to move forward without halting the process."

"We need a long-term approach."

For his part, founder of The Uncertainty Experts Sam Conniff is flabbergasted by what he sees as the hypocrisy of the tech giants in proposing a pause.

"The godparents of technology attempting to put generative AI in detention sounds like parents telling their kids what they're dancing to 'isn't real music anymore'," he exclaims. "Sorry, geezers, but what was the ethical framework you had in mind when sowing the seeds of technology that would end up contributing to epidemic anxiety levels and harvesting personal data with such virtue?"

"And now, the generation who grew up looking up to you outpaces you with technology that threatens yours, and you call 'ethical concerns' on them," he continues. "Crikey, anyone would think you're the sort of megalomaniac who'd decide it's better to take a well-heeled few to an inhospitable planet over creating solutions for the down-at-heel many whose once hospitable planet looks increasingly in trouble."

Founder of The Uncertainty Experts Sam Conniff

At the same time, Sam agrees that AI's future needs to be considered carefully. "I do respect and recognise a call to consider the longitudinal and ethical implications of technological advances," he concedes. "But rather than focus the debate on highly experienced short-term thinkers, it might be wiser to look to examples of multi-generational thinking that exists widely in indigenous cultures; for example, they could take a leaf from the Blackfoot tribe's far-sighted approach to seven-generation planning.

"In short, a six-month go-slow from the seemingly flustered self-interested elite looks like a continuation of more short-term thinking in a long-term world that pitches incredible and inspiring technology as something to fear, a well-known ruse of threatened powers to disquiet the populace. But, if the demand was more for a Citizens Assembly debate on the potential for the positive, generative force of AI, working in unison with all life systems, then that might be the sort of long-term thinking our increasingly short-term world needs."

"We're at an inflexion point."

It's pretty clear that whether or not a pause in AI development is attempted, most people in the industry are agreed on one thing. An unfettered, no-holds-barred approach of "let's just see what happens" is just not going to fly.

Best known for founding the Google Empathy Lab in 2014, Danielle Krettek Cobb has just left Google to co-found what she calls "a nonprofit, Grandmother AI". But she's certainly no uncritical cheerleader for the technology. Right at the frontline, she's aware of the issues that need to be tackled and believes discussion of a pause is more of a distraction than anything else.

"While taking a pause is far better than competitively racing ahead, it's a mind trick," she argues. "The real question of the moment isn't about buying linear time to become more 'prepared' or 'responsible'. The urgent question is how to prioritise the emotional and cultural aspects of intelligence training – how to expand this conversation to include critical new voices and more dimensional ways of seeing and being. This may not come from established companies, institutions and the social media voices already in this debate, but it could. It likely will come from the edges, the yet unheard and unperceived influences for ensuring a harmonious new era of AI tech.

"Vast vital data is missing from the education corpus of large language models: an emphasis on the best of emerging social science, our vast spectrum of cultural expressions, heart wisdom, and natural intelligence," she adds. "It hasn't been a priority for AIs to learn this: yet."

Danielle Krettek Cobb

But it doesn't have to remain this way. "I believe that if people are vocal, AI makers will respond," Danielle says. "I believe in the good of human beings, to act for us and the more than human world. We don't need to stop time to allow for a new, superstring alternate universe of development to open up. We're at an inflexion point where it's never been more pressing to look in the mirror of AI and turn towards our collective challenge with honesty and humility, to admit that these new models require new models."

Join the debate!

Want to join the discussion on these and other hot topics around the future of technological innovation? Presented by Spark Lab and Semi Permanent, Future State 2023 will be hosted at Spark Arena on Thursday 11 May, with around 1,300 people expected to attend.

With speakers of international calibre, the one-day event will be curated to the theme of 'New Realities', which will explore the driving forces behind the next era of the internet and unpack the challenges and opportunities of developments such as the metaverse, data sovereignty, AI, VR, gamification and tokenisation, and UX and UI design. For full details, visit the Future State 2023 website.
